Environment setup


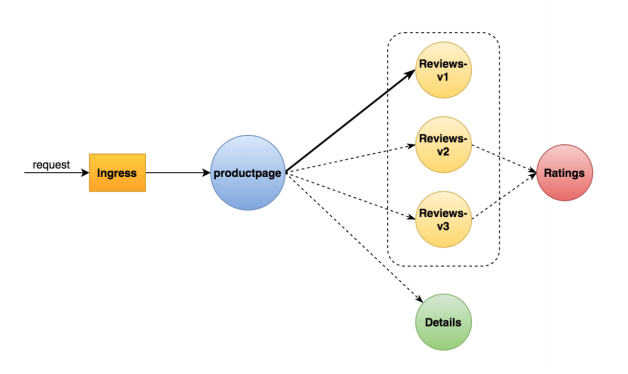

Using the official bookinfo sample as an example:

[root@kube-mas ~]# kubectl apply -f istio-1.6.8/samples/bookinfo/platform/kube/ -n test
[root@kube-mas ~]# kubectl get po -n test
NAME                                   READY   STATUS    RESTARTS   AGE
details-v1-558b8b4b76-chjvb            2/2     Running   0          4h3m
details-v2-85d44f95c9-5bdqh            2/2     Running   0          4h3m
mongodb-v1-78d87ffc48-7pgd9            2/2     Running   0          4h3m
mysqldb-v1-6898548c56-rlq5x            2/2     Running   0          4h3m
productpage-v1-6987489c74-lfrw2        2/2     Running   0          4h3m
ratings-v1-7dc98c7588-5dfkw            2/2     Running   0          4h3m
ratings-v2-86f6d54875-gl9zf            2/2     Running   0          4h3m
ratings-v2-mysql-6b684bf9cd-phth6      2/2     Running   0          4h3m
ratings-v2-mysql-vm-7748d7687d-xhg2r   2/2     Running   0          4h3m
reviews-v1-7f99cc4496-9dggd            2/2     Running   0          4h3m
reviews-v2-7d79d5bd5d-qk87n            2/2     Running   0          4h3m
reviews-v3-7dbcdcbc56-kltv4            2/2     Running   0          4h3m

Configuring request routing

Route all traffic to the v1 version of each microservice

Write the ingress Gateway configuration


[root@kube-mas ~]# mkdir traffic/{gw,dr,vs} -p
[root@kube-mas gw]# cat bookinfo-gw.yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: bookinfo-gw
  namespace: test
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "bookinfo.xieys.club"

[root@kube-mas gw]# kubectl apply -f bookinfo-gw.yaml

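Configure the VirtualService for productpage

Bind this VirtualService to the Gateway configured above. This service sits behind the gateway and is the entry point of the website. It routes requests to the pods behind the productpage Service that carry the label version: v1.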


[root@kube-mas vs]# cd
[root@kube-mas ~]# cat traffic/vs/productpage-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: productpage-vs
  namespace: test
spec:
  gateways:
  - bookinfo-gw
  - mesh
  hosts:
  - "productpage.test.svc.cluster.local"
  - "bookinfo.xieys.club"
  http:
  - match:
    - uri:
        exact: /productpage
    - uri:
        prefix: /static
    - uri:
        exact: /login
    - uri:
        exact: /logout
    - uri:
        prefix: /api/v1/products
    - gateways:
      - bookinfo-gw
    route:
    - destination:
        host: "productpage.test.svc.cluster.local"
        subset: v1
        port:
          number: 9080
  - match:
    - gateways:
      - mesh
    route:
    - destination:
        host: "productpage.test.svc.cluster.local"
        subset: v1
        port:
          number: 9080
  - route:
    - destination:
        host: "productpage.test.svc.cluster.local"
        subset: v1
        port:
          number: 9080

[root@kube-mas ~]# kubectl apply -f traffic/vs/productpage-vs.yaml

Configure the DestinationRule for the productpage VirtualService


[root@kube-mas ~]# cat traffic/dr/productpage-dr.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: productpage-dr
  namespace: test
spec:
  host: productpage.test.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1

[root@kube-mas ~]# kubectl apply -f traffic/dr/productpage-dr.yaml

Configure the VirtualService and DestinationRule for the details microservice


The VirtualService sends all details traffic to v1:

[root@kube-mas ~]# cat traffic/vs/details-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: details-vs
  namespace: test
spec:
  hosts:
  - details.test.svc.cluster.local
  http:
  - route:
    - destination:
        host: details.test.svc.cluster.local
        subset: v1

[root@kube-mas ~]# kubectl apply -f traffic/vs/details-vs.yaml
[root@kube-mas ~]# cat traffic/dr/details-dr.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: details-dr
  namespace: test
spec:
  host: "details.test.svc.cluster.local"
  subsets:
  - name: v1
    labels:
      version: v1

[root@kube-mas ~]# kubectl apply -f traffic/dr/details-dr.yaml

Configure the VirtualService and DestinationRule for the ratings microservice


[root@kube-mas ~]# cat traffic/vs/ratings-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: ratings-vs
  namespace: test
spec:
  hosts:
  - ratings.test.svc.cluster.local
  http:
  - route:
    - destination:
        host: ratings.test.svc.cluster.local
        subset: v1

[root@kube-mas ~]# kubectl apply -f traffic/vs/ratings-vs.yaml
[root@kube-mas ~]# cat traffic/dr/ratings-dr.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: ratings-dr
  namespace: test
spec:
  host: ratings.test.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1

[root@kube-mas ~]# kubectl apply -f traffic/dr/ratings-dr.yaml

Configure the VirtualService and DestinationRule for the reviews microservice


[root@kube-mas ~]# cat traffic/vs/reviews-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews-vs
  namespace: test
spec:
  hosts:
  - reviews.test.svc.cluster.local
  http:
  - route:
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v1

[root@kube-mas ~]# kubectl apply -f traffic/vs/reviews-vs.yaml
[root@kube-mas ~]# cat traffic/dr/reviews-dr.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: reviews-dr
  namespace: test
spec:
  host: reviews.test.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1

[root@kube-mas ~]# kubectl apply -f traffic/dr/reviews-dr.yaml

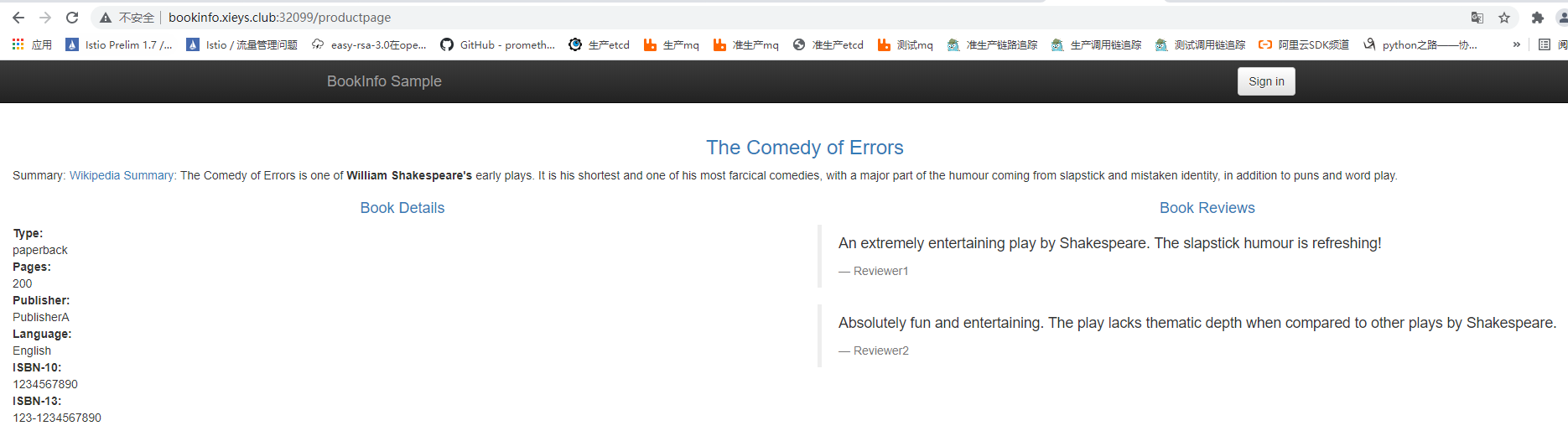

Once all of these rules are applied, open the page. The logs show that only the v1 version of each microservice receives requests.

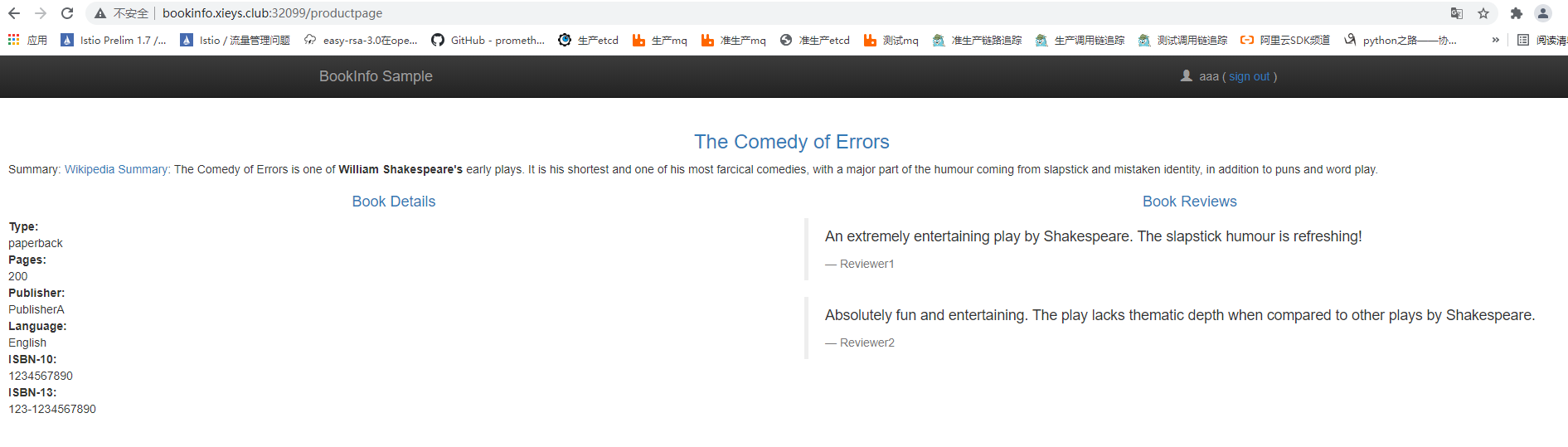

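Configuring condition-based routing rules

Requirement: route all traffic from a specific user to a specific service version. For example, route all traffic from the user xieys to v2 of the reviews service.

Note: Istio has no special, built-in mechanism for user identity. In this example, productpage adds a custom end-user header to every HTTP request it sends to the reviews service (this is implemented entirely in the application code), which is what makes this routing possible.

reviews:v2 is the version that includes the star ratings feature (stars shown in black).

Modify the reviews VirtualService to add a routing condition, as follows: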


[root@kube-mas ~]# cat traffic/vs/reviews-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews-vs
  namespace: test
spec:
  hosts:
  - reviews.test.svc.cluster.local
  http:
  - match:
    - headers:
        end-user:
          exact: xieys
    route:
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v2
  - route:
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v1

This adds a match condition and the route used for requests that satisfy it.

[root@kube-mas ~]# kubectl apply -f traffic/vs/reviews-vs.yaml

Update the corresponding DestinationRule to add subset v2:


[root@kube-mas ~]# cat traffic/dr/reviews-dr.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: reviews-dr
  namespace: test
spec:
  host: reviews.test.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2

[root@kube-mas ~]# kubectl apply -f traffic/dr/reviews-dr.yaml

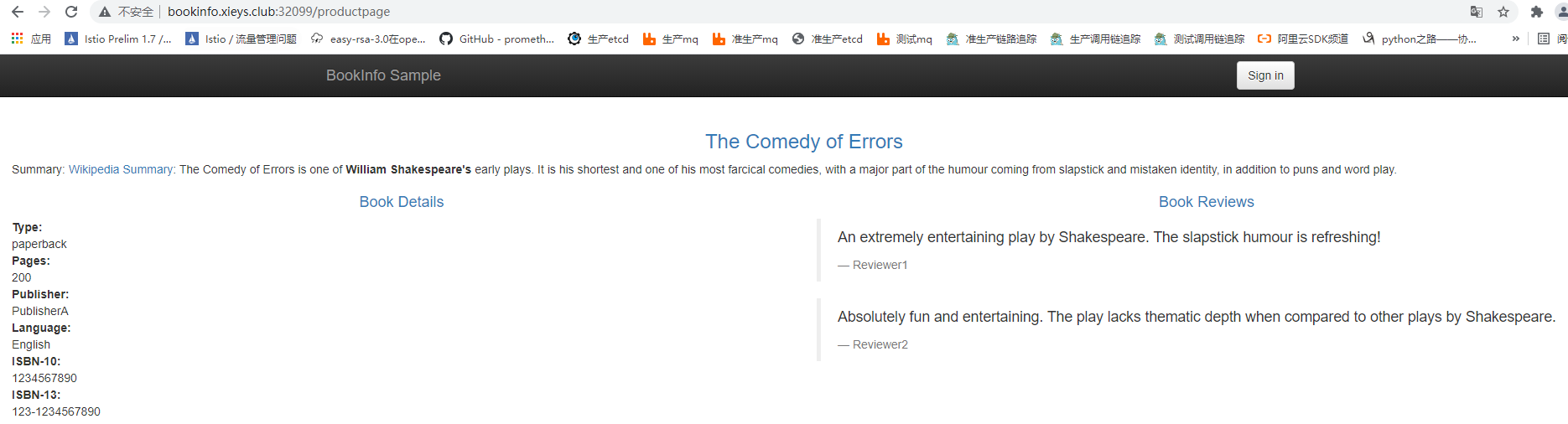

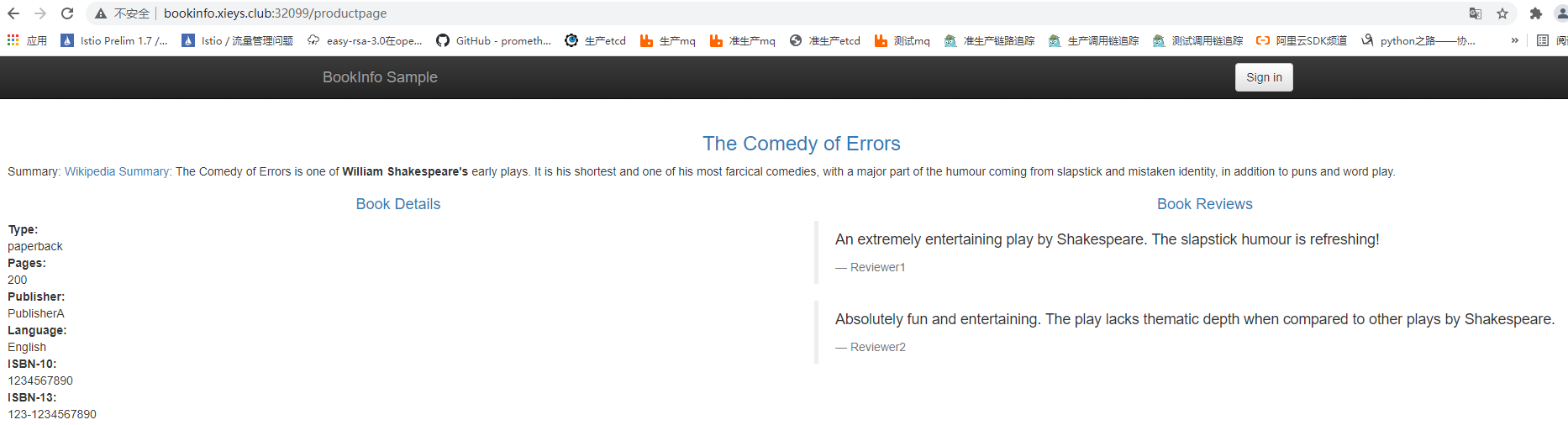

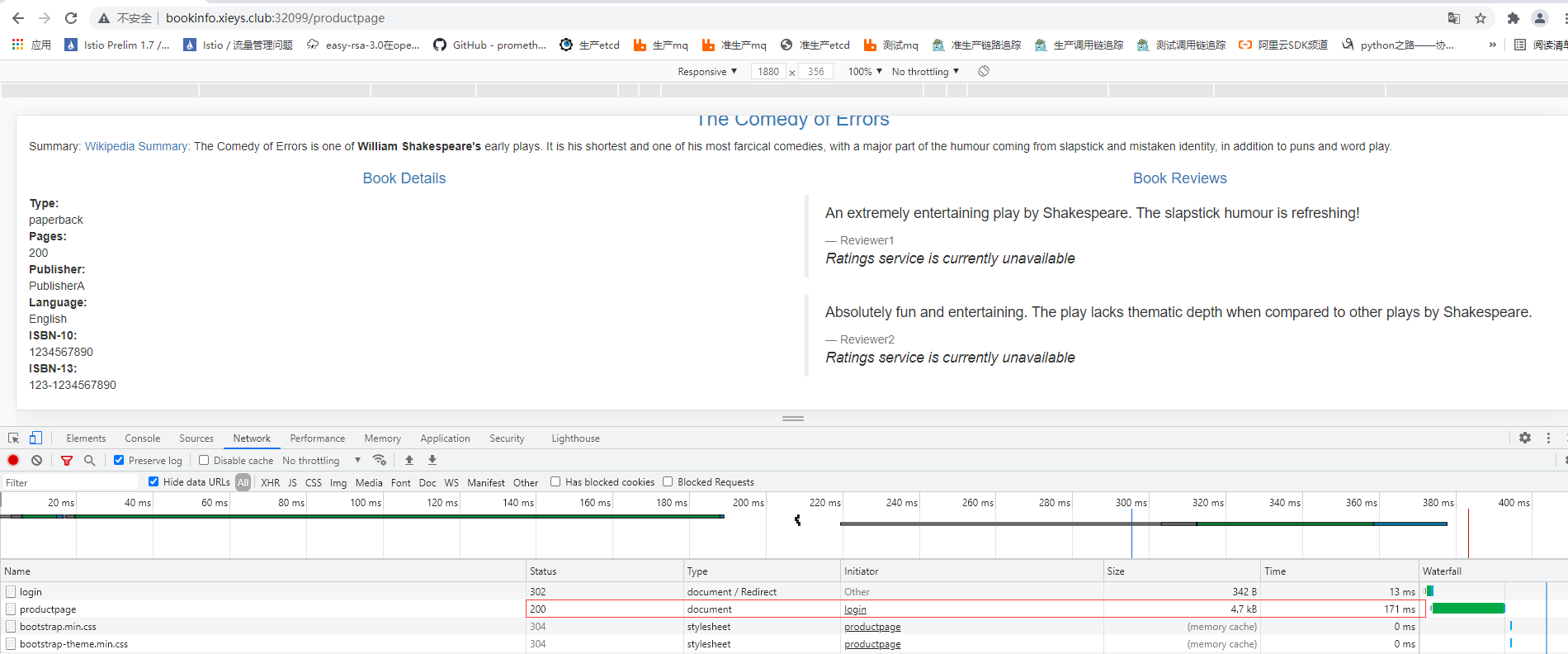

Before logging in:

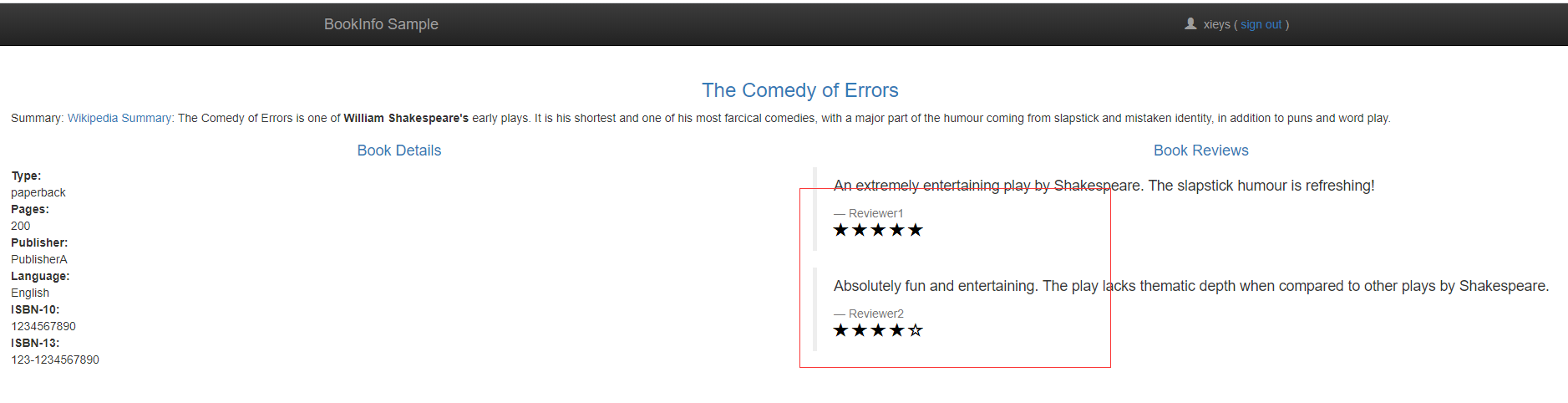

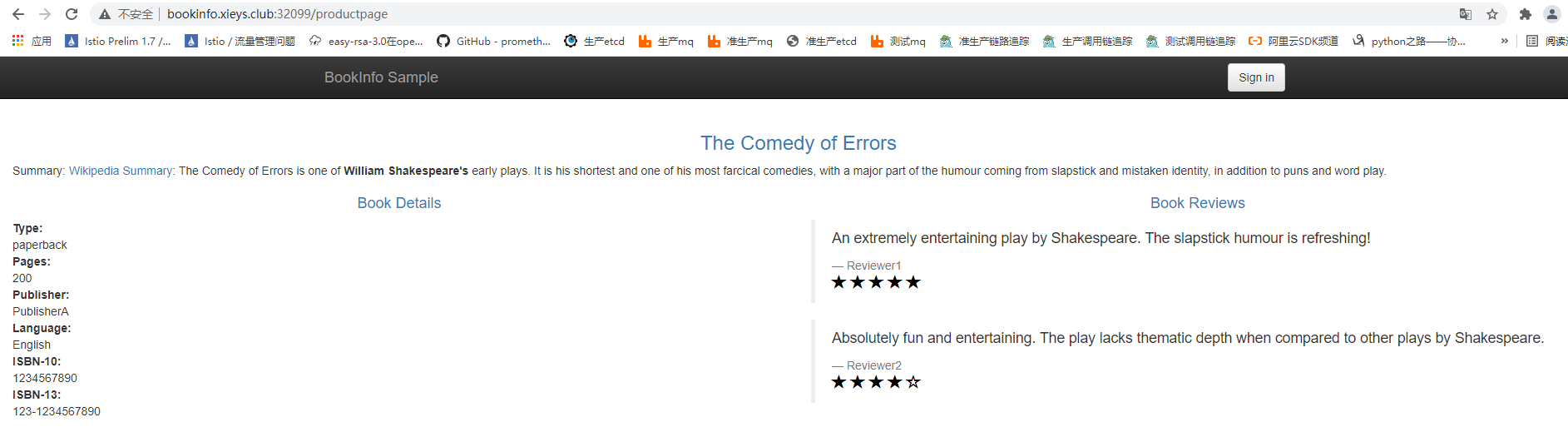

After logging in as xieys (logging in only requires a username):

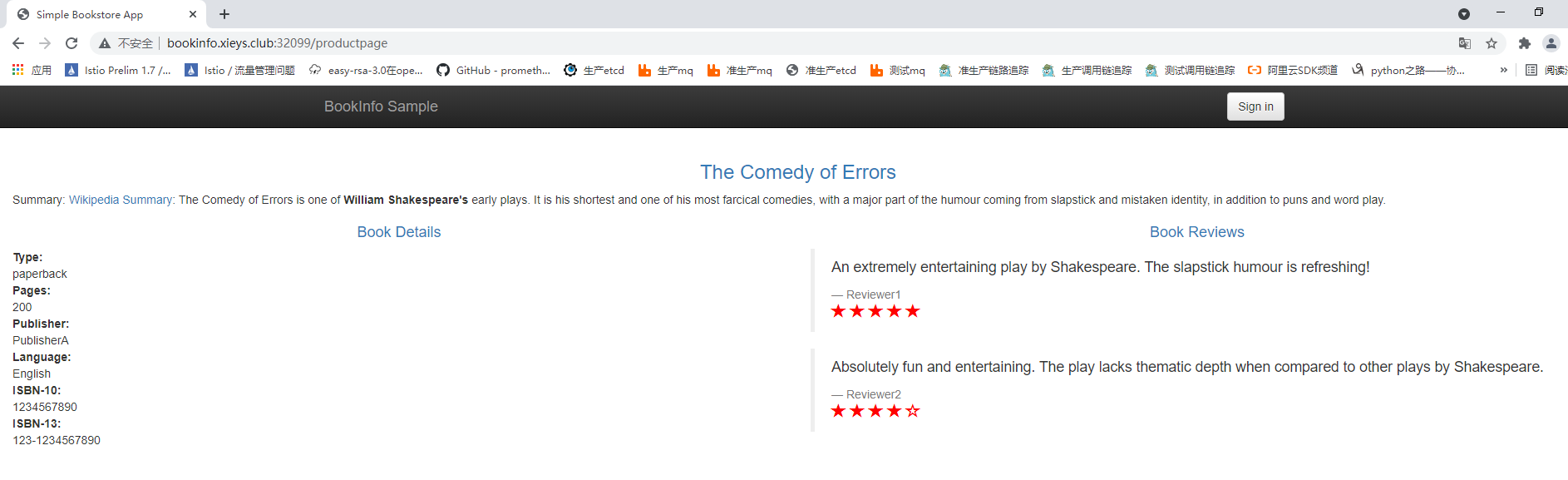

Canary deployment example

Using reviews from bookinfo as an example

Modify the reviews VirtualService:


[root@kube-mas ~]# cat traffic/vs/reviews-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews-vs
  namespace: test
spec:
  hosts:
  - reviews.test.svc.cluster.local
  http:
  - match:
    - headers:
        end-user:
          exact: xieys
    route:
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v3
  - route:
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v1
      weight: 80
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v2
      weight: 20

[root@kube-mas ~]# kubectl apply -f traffic/vs/reviews-vs.yaml

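- When the match condition is met (request header end-user=xieys), requests are routed to reviews v3 (star ratings shown in red).

- When the condition is not met, requests to the reviews service are split: 80% go to v1 and 20% go to v2.

Modify the reviews DestinationRule: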


[root@kube-mas ~]# cat traffic/dr/reviews-dr.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: reviews-dr
  namespace: test
spec:
  host: reviews.test.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
  - name: v3
    labels:
      version: v3

[root@kube-mas ~]# kubectl apply -f traffic/dr/reviews-dr.yaml

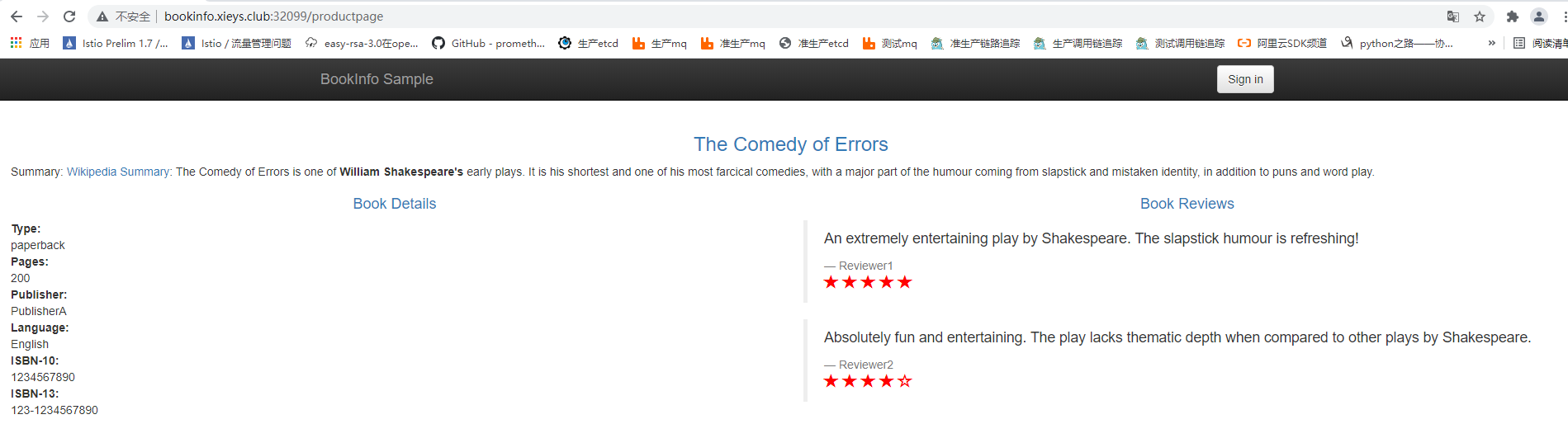

Result

Before logging in as xieys, about 8 out of 10 requests look like this:

The other two look like this:

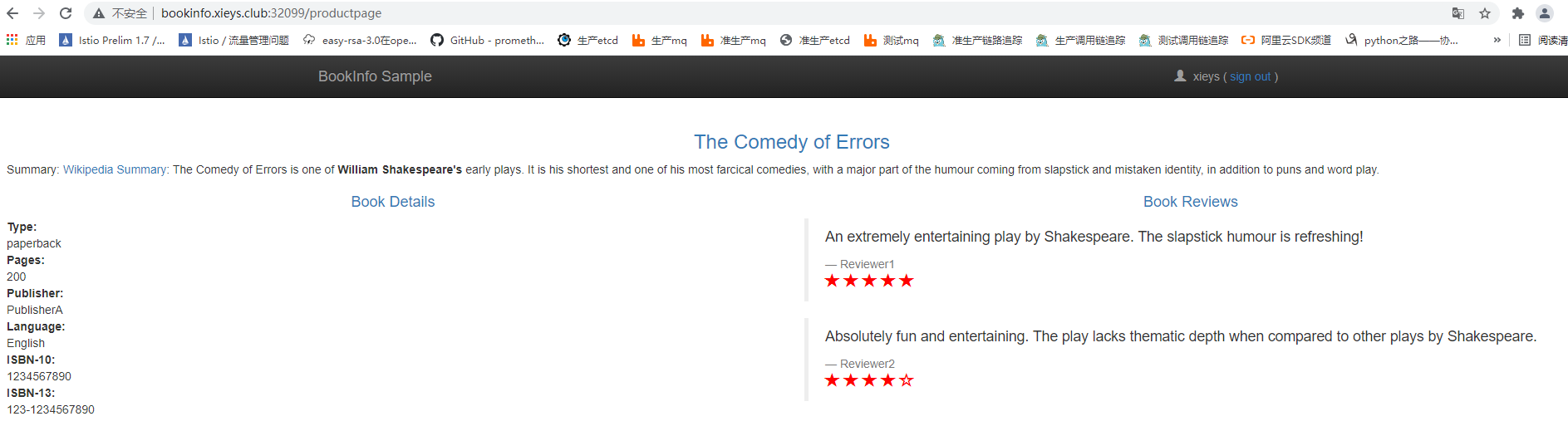

After logging in as xieys:

When moving from v1 to v2, you can use weight to shift traffic step by step until all of it goes to v2.
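A minimal sketch of one intermediate step of such a migration, assuming a 50/50 split. The weights are illustrative and do not come from the original walkthrough. For brevity, the sketch leaves out the end-user: xieys match rule from the canary example. The v1 and v2 subsets come from the reviews-dr DestinationRule defined above.

```yaml
# Sketch: an intermediate step of a v1 -> v2 migration for reviews.
# The weights are illustrative assumptions; only they change from step to step.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews-vs
  namespace: test
spec:
  hosts:
  - reviews.test.svc.cluster.local
  http:
  - route:
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v1
      weight: 50   # lowered step by step, e.g. 80 -> 50 -> 0
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v2
      weight: 50   # raised step by step, e.g. 20 -> 50 -> 100
```

Each step changes only the two weight values and is re-applied with kubectl apply -f traffic/vs/reviews-vs.yaml. In every example in this article, the weights within a route add up to 100.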

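Fault injection

Injecting an HTTP delay fault

In the official bookinfo sample, requests flow as follows:

Productpage -> reviews:v1 (other users)

Productpage -> reviews:v2 -> ratings (the specified user)

To test the resiliency of the bookinfo microservices, we inject a 3s delay between reviews:v2 and the ratings service for user xieys. This test uncovers a bug that was intentionally introduced into the bookinfo application.

The reviews:v2 service has a hard-coded 10s connection timeout for its calls to ratings. productpage has a hard-coded timeout of 3s plus one retry (6s in total) for its calls to reviews. So even with the 3s delay injected, we would expect the end-to-end flow to complete without errors.

In practice, however, productpage's call to reviews times out prematurely after roughly 3s and throws an error.

Modify the ratings VirtualService to inject the delay: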


[root@kube-mas ~]# cat traffic/vs/ratings-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: ratings-vs
  namespace: test
spec:
  hosts:
  - ratings.test.svc.cluster.local
  http:
  - fault:               # fault injection policy applied to client-side HTTP traffic
      delay:             # delay fault
        fixedDelay: 3s   # fixed delay added before the request is forwarded
        percentage:      # percentage of requests to delay
          value: 100
    match:
    - headers:
        end-user:
          exact: xieys
    route:
    - destination:
        host: ratings.test.svc.cluster.local
        subset: v1
  - route:
    - destination:
        host: ratings.test.svc.cluster.local
        subset: v1

[root@kube-mas ~]# kubectl apply -f traffic/vs/ratings-vs.yaml

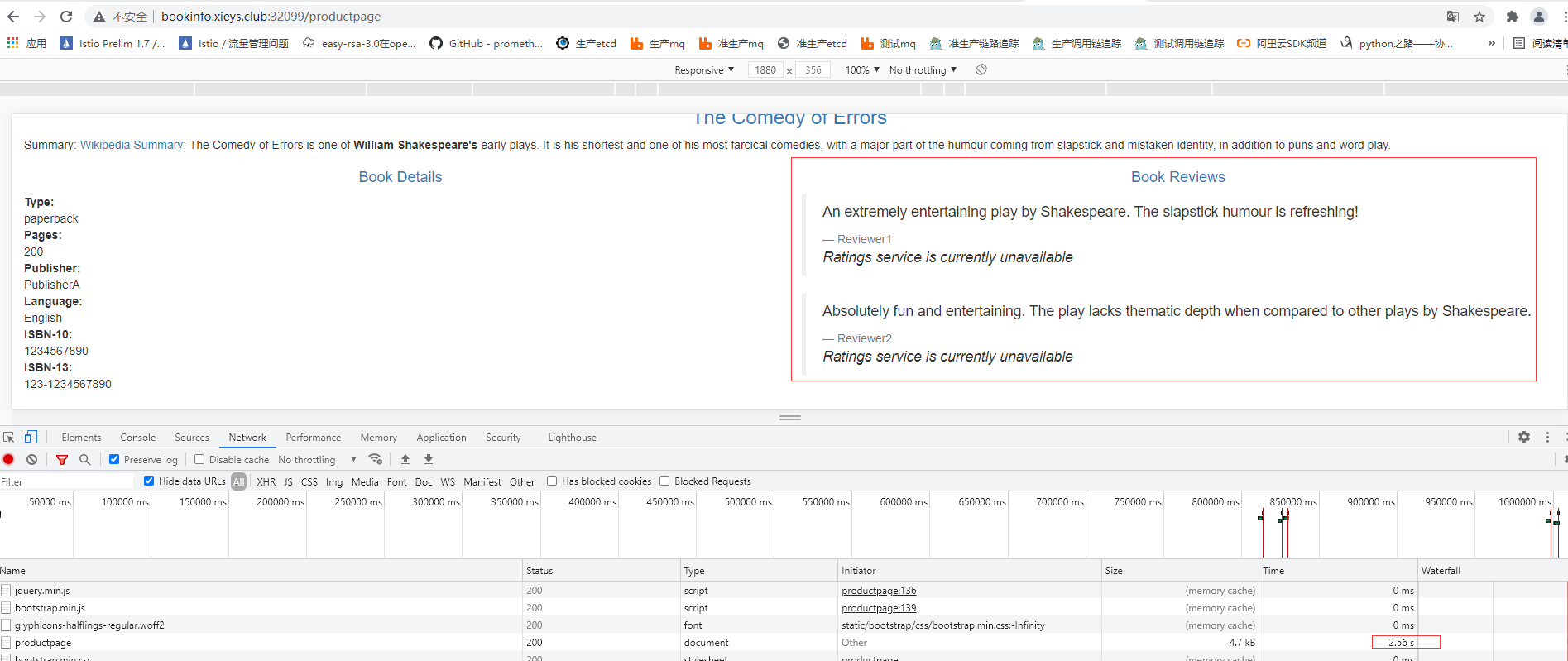

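Testing the delay configuration

- Open the bookinfo application in a browser

- Log in to the /productpage page as user xieys

The page hangs for about 3s. The page itself does not fail, but the Reviews section displays an error message.

Injecting an HTTP abort fault

Another way to test microservice resiliency is to introduce an HTTP abort fault. Here we inject an HTTP abort into the ratings microservice for the test user xieys.

In this case, we expect the page to load immediately.

Modify the ratings VirtualService: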


[root@kube-mas ~]# cat traffic/vs/ratings-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: ratings-vs
  namespace: test
spec:
  hosts:
  - ratings.test.svc.cluster.local
  http:
  - fault:               # fault injection policy applied to client-side HTTP traffic
      abort:             # abort fault
        httpStatus: 500  # HTTP status code used to abort the request
        percentage:      # percentage of requests to abort
          value: 100
    match:
    - headers:
        end-user:
          exact: xieys
    route:
    - destination:
        host: ratings.test.svc.cluster.local
        subset: v1
  - route:
    - destination:
        host: ratings.test.svc.cluster.local
        subset: v1

[root@kube-mas ~]# kubectl apply -f traffic/vs/ratings-vs.yaml

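Testing the abort configuration

- Open the bookinfo application in a browser

- Log in to the /productpage page as user xieys. The page loads immediately and shows the following:

Log out of xieys and use another user, or open bookinfo in an incognito window. In either case you will not see any errors. The abort rule only matches requests whose end-user header is xieys, and other users are served by reviews:v1, which does not call ratings at all.

Traffic shifting

Traffic shifting for HTTP applications

How do you gradually migrate traffic from one version of a microservice to another?

In Istio, you achieve this by configuring a series of rules that route a percentage of traffic to one version or the other. For example, we first send 50% of the traffic to reviews:v1 and the other 50% to reviews:v3. We then complete the migration by sending 100% of the traffic to reviews:v3.

Modify the reviews VirtualService, adding routes with weights: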


[root@kube-mas ~]# cat traffic/vs/reviews-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews-vs
  namespace: test
spec:
  hosts:
  - reviews.test.svc.cluster.local
  http:
  - route:
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v1
      weight: 50
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v3
      weight: 50

[root@kube-mas ~]# kubectl apply -f traffic/vs/reviews-vs.yaml

Add the reviews DestinationRule:


[root@kube-mas ~]# cat traffic/dr/reviews-dr.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: reviews-dr
  namespace: test
spec:
  host: reviews.test.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
  - name: v3
    labels:
      version: v3

[root@kube-mas ~]# kubectl apply -f traffic/dr/reviews-dr.yaml

Verification

Open the /productpage page in a browser. About 50% of requests look like this:

and the other 50% look like this:

Change the weight of reviews:v3 to 100%:


[root@kube-mas ~]# cat traffic/vs/reviews-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews-vs
  namespace: test
spec:
  hosts:
  - reviews.test.svc.cluster.local
  http:
  - route:
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v1
      weight: 0
    - destination:
        host: reviews.test.svc.cluster.local
        subset: v3
      weight: 100

[root@kube-mas ~]# kubectl apply -f traffic/vs/reviews-vs.yaml

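Verification

Every request now goes to reviews v3:

Traffic shifting for TCP applications

This section shows how to gradually migrate TCP traffic from one version of a microservice to another. The approach is very similar to traffic shifting for HTTP applications.

Using the official tcp-echo sample as an example

Deploy both versions (v1 and v2) of the tcp-echo microservice: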


[root@kube-mas ~]# kubectl apply -f istio-1.6.8/samples/tcp-echo/tcp-echo-services.yaml -n test

Write and apply the Gateway:


[root@kube-mas ~]# cat traffic/gw/tcp-echo-gw.yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: tcp-echo-gw
  namespace: test
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 31400
      name: tcp
      protocol: TCP
    hosts:
    - 192.168.116.15

[root@kube-mas ~]# kubectl apply -f traffic/gw/tcp-echo-gw.yaml

Write and apply the VirtualService, routing all traffic to v1:


[root@kube-mas ~]# cat traffic/vs/tcp-echo-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: tcp-echo-v2
  namespace: test
spec:
  hosts:
  - "192.168.116.15"
  - "tcp-echo.test.svc.cluster.local"
  gateways:
  - tcp-echo-gw
  - mesh
  tcp:
  - match:
    - port: 31400
    - gateways:
      - tcp-echo-gw
    route:
    - destination:
        host: tcp-echo.test.svc.cluster.local
        subset: v1
        port:
          number: 9000
  - match:
    - gateways:
      - mesh
    route:
    - destination:
        host: tcp-echo.test.svc.cluster.local
        subset: v1
        port:
          number: 9000

[root@kube-mas ~]# kubectl apply -f traffic/vs/tcp-echo-vs.yaml

Write and apply the DestinationRule:


[root@kube-mas ~]# cat traffic/dr/tcp-echo-dr.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: tcp-echo-dr
  namespace: test
spec:
  host: tcp-echo.test.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1

[root@kube-mas ~]# kubectl apply -f traffic/dr/tcp-echo-dr.yaml

Test the traffic:


Check the TCP port exposed by the ingress gateway:

[root@kube-mas ~]# kubectl get svc -n istio-system | grep gateway
istio-egressgateway ClusterIP 10.96.138.204 <none> 80/TCP,443/TCP,15443/TCP 42h
istio-ingressgateway NodePort 10.96.61.36 <none> 15021:30167/TCP,80:32099/TCP,443:32266/TCP,31400:30597/TCP,15443:31792/TCP 42h

The gateway's TCP port 31400 is exposed on NodePort 30597.

Install the nc tool and open 10 connections:

[root@kube-mas ~]# yum -y install nc
[root@kube-mas ~]# for i in {1..10};do (date;sleep 1) | nc 192.168.116.15 30597;done
one Sat May 29 11:23:30 CST 2021
one Sat May 29 11:23:31 CST 2021
one Sat May 29 11:23:32 CST 2021
one Sat May 29 11:23:33 CST 2021
one Sat May 29 11:23:34 CST 2021
one Sat May 29 11:23:35 CST 2021
one Sat May 29 11:23:36 CST 2021
one Sat May 29 11:23:37 CST 2021
one Sat May 29 11:23:38 CST 2021
one Sat May 29 11:23:39 CST 2021

All traffic currently goes to v1, which replies with "one" followed by the timestamp.

Shift 30% of the traffic from tcp-echo:v1 to tcp-echo:v2

- Modify the VirtualService: add a v2 destination to each route and set the weights


[root@kube-mas ~]# cat traffic/vs/tcp-echo-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: tcp-echo-v2
  namespace: test
spec:
  hosts:
  - "192.168.116.15"
  - "tcp-echo.test.svc.cluster.local"
  gateways:
  - tcp-echo-gw
  - mesh
  tcp:
  - match:
    - port: 31400
    - gateways:
      - tcp-echo-gw
    route:
    - destination:
        host: tcp-echo.test.svc.cluster.local
        subset: v1
        port:
          number: 9000
      weight: 70
    - destination:
        host: tcp-echo.test.svc.cluster.local
        subset: v2
        port:
          number: 9000
      weight: 30
  - match:
    - gateways:
      - mesh
    route:
    - destination:
        host: tcp-echo.test.svc.cluster.local
        subset: v1
        port:
          number: 9000
      weight: 70
    - destination:
        host: tcp-echo.test.svc.cluster.local
        subset: v2
        port:
          number: 9000
      weight: 30

[root@kube-mas ~]# kubectl apply -f traffic/vs/tcp-echo-vs.yaml

- Modify the DestinationRule to add a v2 subset


[root@kube-mas ~]# cat traffic/dr/tcp-echo-dr.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: tcp-echo-dr
  namespace: test
spec:
  host: tcp-echo.test.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2

[root@kube-mas ~]# kubectl apply -f traffic/dr/tcp-echo-dr.yaml

Test


[root@kube-mas ~]# for i in {1..10};do (date;sleep 1) | nc 192.168.116.15 30597;done
one Sat May 29 11:29:54 CST 2021
two Sat May 29 11:29:55 CST 2021
one Sat May 29 11:29:56 CST 2021
one Sat May 29 11:29:57 CST 2021
one Sat May 29 11:29:58 CST 2021
one Sat May 29 11:29:59 CST 2021
two Sat May 29 11:30:00 CST 2021
one Sat May 29 11:30:01 CST 2021
one Sat May 29 11:30:02 CST 2021
two Sat May 29 11:30:03 CST 2021

3 of the 10 connections went to v2, in line with the 30% weight. Once v2 is stable, you can move all of the traffic to v2.
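A sketch of that final step, assuming the tcp-echo-vs.yaml layout used above: both routes point only at subset v2. When a route has a single destination, Istio sends all of that route's traffic to it, so no weight field is needed.

```yaml
# Sketch: final step of the tcp-echo migration, with all TCP traffic going to v2.
# Same layout as tcp-echo-vs.yaml above; only the subsets and weights change.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: tcp-echo-v2
  namespace: test
spec:
  hosts:
  - "192.168.116.15"
  - "tcp-echo.test.svc.cluster.local"
  gateways:
  - tcp-echo-gw
  - mesh
  tcp:
  - match:
    - port: 31400
    - gateways:
      - tcp-echo-gw
    route:
    - destination:            # single destination: receives 100% of this route
        host: tcp-echo.test.svc.cluster.local
        subset: v2
        port:
          number: 9000
  - match:
    - gateways:
      - mesh
    route:
    - destination:
        host: tcp-echo.test.svc.cluster.local
        subset: v2
        port:
          number: 9000
```

After re-applying this file, the same nc loop should print only "two" lines. This is an expectation, not output recorded in this walkthrough.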

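Setting request timeouts

The timeout for HTTP requests can be set with the timeout field of a route rule. By default, the timeout is disabled.

Below, we use the official httpbin sample as an example.

Environment setup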

[root@kube-mas ~]# kubectl apply -f istio-1.6.8/samples/httpbin/httpbin.yaml -n test

Configure the httpbin Gateway:


[root@kube-mas ~]# cat traffic/gw/http-bin-gw.yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: httpbin-gw
  namespace: test
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "httpbin.xieys.club"

[root@kube-mas ~]# kubectl apply -f traffic/gw/http-bin-gw.yaml

Configure the httpbin VirtualService and set the request timeout:


[root@kube-mas ~]# cat traffic/vs/httpbin-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin-vs
  namespace: test
spec:
  hosts:
  - httpbin.test.svc.cluster.local
  - httpbin.xieys.club
  gateways:
  - httpbin-gw
  - mesh
  http:
  - route:
    - destination:
        host: httpbin.test.svc.cluster.local
        port:
          number: 8000
    timeout: 3s

[root@kube-mas ~]# kubectl apply -f traffic/vs/httpbin-vs.yaml

This route does not reference any subsets, so no DestinationRule is needed.

Test


Rather than add a DNS record, I tested by passing the ingress gateway's HTTP NodePort (32099) to curl as a proxy with -x. /delay/2 makes httpbin respond after a 2s delay, which is within the 3s timeout, so the request succeeds:

[root@kube-mas ~]# curl httpbin.xieys.club/delay/2 -x 192.168.116.15:32099
{
  "args": {},
  "data": "",
  "files": {},
  "form": {},
  "headers": {
    "Accept": "*/*",
    "Content-Length": "0",
    "Host": "httpbin.xieys.club",
    "User-Agent": "curl/7.29.0",
    "X-B3-Parentspanid": "ad6dd8c4391a5a88",
    "X-B3-Sampled": "1",
    "X-B3-Spanid": "b9fed608b2b5ae7c",
    "X-B3-Traceid": "88ee9152124625e1ad6dd8c4391a5a88",
    "X-Envoy-Expected-Rq-Timeout-Ms": "3000",
    "X-Envoy-Internal": "true",
    "X-Forwarded-Client-Cert": "By=spiffe://cluster.local/ns/test/sa/httpbin;Hash=eb9955714cc3fbdac732639ccece084ddcafbd49179d4a5deae8ae056bdf8c33;Subject=\"\";URI=spiffe://cluster.local/ns/istio-system/sa/istio-ingressgateway-service-account"
  },
  "origin": "10.244.0.0",
  "url": "http://httpbin.xieys.club/delay/2"
}

Changing /delay/2 to /delay/3 and testing again produces a request timeout error:

[root@kube-mas ~]# curl httpbin.xieys.club/delay/3 -x 192.168.116.15:32099
upstream request timeout

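Setting up retries

The retry setting specifies the maximum number of times the Envoy proxy attempts to connect to a service if the initial call fails. Retries keep calls from failing permanently because of transient problems such as a temporarily overloaded service or network, which improves service availability and application performance. The interval between retries (25ms+) is variable and determined automatically by Istio, which prevents the called service from being flooded with requests. By default, HTTP requests are retried twice before an error is returned.

As with timeouts, Istio's default retry behavior may not suit your application's needs in terms of latency or availability: too many retries to a failed service can slow things down or reduce availability. You can adjust retry settings per service in the VirtualService without changing business code. You can further refine retry behavior by adding a per-retry timeout, which specifies how long to wait for each retry attempt to connect to the service successfully.

Again, we use httpbin as the example.

Modify the httpbin VirtualService to add retries: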


[root@kube-mas ~]# cat traffic/vs/httpbin-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin-vs
  namespace: test
spec:
  hosts:
  - httpbin.test.svc.cluster.local
  - httpbin.xieys.club
  gateways:
  - httpbin-gw
  - mesh
  http:
  - route:
    - destination:
        host: httpbin.test.svc.cluster.local
        port:
          number: 8000
    retries:
      attempts: 3
      perTryTimeout: 4s
      retryOn: "gateway-error"

[root@kube-mas ~]# kubectl apply -f traffic/vs/httpbin-vs.yaml

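Parameter explanation

- retries: defines the policy applied when a request fails, including the number of retries, the timeout, and the retry conditions

- attempts: required; the number of retries

- perTryTimeout: the timeout for each attempt; units can be ms, s, m, or h

- retryOn: the conditions under which to retry; multiple conditions can be given, separated by commas

retryOn values

| Value | Meaning |
| --- | --- |
| 5xx | Retry when the upstream service returns a 5xx response code, or does not respond at all |
| gateway-error | Similar to 5xx, but only retries on 502, 503, and 504 |
| connect-failure | Retry when connecting to the upstream service fails |
| retriable-4xx | Retry when the upstream service returns a retriable 4xx response code |
| refused-stream | Retry when the upstream service resets the stream with the REFUSED_STREAM error code |
| cancelled | Retry when the gRPC status code in the response headers is cancelled |
| deadline-exceeded | Retry when the gRPC status code in the response headers is deadline-exceeded |
| internal | Retry when the gRPC status code in the response headers is internal |
| resource-exhausted | Retry when the gRPC status code in the response headers is resource-exhausted |
| unavailable | Retry when the gRPC status code in the response headers is unavailable |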

Test


[root@kube-mas ~]# time curl -I httpbin.xieys.club/delay/5 -x 192.168.116.15:32099
HTTP/1.1 504 Gateway Timeout
content-length: 24
content-type: text/plain
date: Sat, 29 May 2021 07:51:24 GMT
server: istio-envoy

real 0m16.120s
user 0m0.003s
sys 0m0.004s

Check the httpbin logs:

[root@kube-mas ~]# kubectl logs -n test -c istio-proxy httpbin-66cdbdb6c5-ndw42 -f
[2021-05-29T07:51:08.997Z] "HEAD /delay/5 HTTP/1.1" 0 DC "-" "-" 0 0 4001 - "10.244.0.0" "curl/7.29.0" "c545c697-743b-92c1-a191-554add8aefc6" "httpbin.xieys.club" "127.0.0.1:80" inbound|8000|http|httpbin.test.svc.cluster.local 127.0.0.1:48670 10.244.1.163:80 10.244.0.0:0 outbound_.8000_._.httpbin.test.svc.cluster.local default
[2021-05-29T07:51:13.018Z] "HEAD /delay/5 HTTP/1.1" 0 DC "-" "-" 0 0 4001 - "10.244.0.0" "curl/7.29.0" "c545c697-743b-92c1-a191-554add8aefc6" "httpbin.xieys.club" "127.0.0.1:80" inbound|8000|http|httpbin.test.svc.cluster.local 127.0.0.1:48780 10.244.1.163:80 10.244.0.0:0 outbound_.8000_._.httpbin.test.svc.cluster.local default
[2021-05-29T07:51:17.024Z] "HEAD /delay/5 HTTP/1.1" 0 DC "-" "-" 0 0 4002 - "10.244.0.0" "curl/7.29.0" "c545c697-743b-92c1-a191-554add8aefc6" "httpbin.xieys.club" "127.0.0.1:80" inbound|8000|http|httpbin.test.svc.cluster.local 127.0.0.1:48882 10.244.1.163:80 10.244.0.0:0 outbound_.8000_._.httpbin.test.svc.cluster.local default
[2021-05-29T07:51:21.105Z] "HEAD /delay/5 HTTP/1.1" 0 DC "-" "-" 0 0 4001 - "10.244.0.0" "curl/7.29.0" "c545c697-743b-92c1-a191-554add8aefc6" "httpbin.xieys.club" "127.0.0.1:80" inbound|8000|http|httpbin.test.svc.cluster.local 127.0.0.1:48990 10.244.1.163:80 10.244.0.0:0 outbound_.8000_._.httpbin.test.svc.cluster.local default

Within a single request, the proxy made the initial attempt plus 3 retries (4 attempts in total). The 4s perTryTimeout cut off each attempt, so a new request was sent to /delay/5 roughly every 4 seconds. The whole request took about 16s before the 504 was returned.

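Something to think about

A route can have an overall request timeout, and each retry attempt also has its own timeout. How do the two interact?

Test 1: set the per-retry timeout to 1s and the overall request timeout to 7s.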
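A sketch of the configuration for Test 1. It assumes the retry settings from the example above (attempts: 3, retryOn: gateway-error) are kept and only the two timeouts change. The 7s and 1s values come from the test description.

```yaml
# Sketch for Test 1: overall route timeout 7s, per-attempt timeout 1s.
# attempts and retryOn are carried over from the retry example above (assumption).
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin-vs
  namespace: test
spec:
  hosts:
  - httpbin.test.svc.cluster.local
  - httpbin.xieys.club
  gateways:
  - httpbin-gw
  - mesh
  http:
  - route:
    - destination:
        host: httpbin.test.svc.cluster.local
        port:
          number: 8000
    timeout: 7s              # budget for the whole request, including retries
    retries:
      attempts: 3
      perTryTimeout: 1s      # budget for each individual attempt
      retryOn: "gateway-error"
```

Re-running time curl -I httpbin.xieys.club/delay/5 -x 192.168.116.15:32099 shows which limit wins. Envoy documents that the route-level timeout covers all attempts, including retries, while perTryTimeout bounds each single attempt. So four 1s attempts (roughly 4s plus retry backoff) should finish before the 7s budget runs out. If perTryTimeout were raised to, say, 4s, the 7s overall timeout would cut the retries short instead. These are expectations to verify, not measured results.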

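Circuit breaking

Circuit breaking is another useful mechanism Istio provides for building resilient microservice applications. With a circuit breaker, you set limits on calls to individual hosts within a service, such as the number of concurrent connections or how many times calls to that host have failed. Once a limit is reached, the circuit breaker trips and stops further connections to that host. The circuit breaker pattern lets clients fail fast instead of trying to connect to an overloaded or failing host.

Circuit breaking applies to "real" network destinations in the load balancing pool. You configure circuit breaker thresholds in a DestinationRule, and the settings apply to each individual host in the service.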

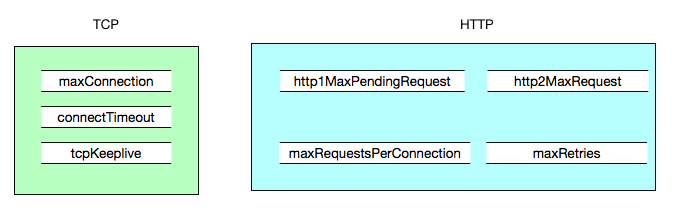

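In Istio, circuit breaking is configured in two places under DestinationRule -> TrafficPolicy:

- ConnectionPool (TCP | HTTP connection pool settings, such as the number of connections and concurrent requests). The HTTP connection pool settings are used together with the TCP connection settings. The main ConnectionPool settings are:

| Istio setting | Envoy setting | Meaning | Notes |
| --- | --- | --- | --- |
| maxConnections | cluster.circuit_breakers.max_connections | Maximum number of connections Envoy establishes to the upstream cluster | |
| http1MaxPendingRequests | cluster.circuit_breakers.max_pending_requests | Maximum number of pending HTTP requests; default 1024 | When exceeded, the cluster's upstream_rq_pending_overflow counter increments |
| http2MaxRequests | cluster.circuit_breakers.max_requests | Maximum number of requests to the backend | Applies only to HTTP/2; for HTTP/1 the limit comes from maxConnections. When exceeded, the cluster's upstream_rq_pending_overflow counter increments, which can be viewed in the stats |
| maxRetries | cluster.circuit_breakers.max_retries | Maximum number of retries | Maximum number of retries that can be outstanding to all hosts in the cluster at any given time. When exceeded, the cluster's upstream_rq_retry_overflow counter increments |
| connectTimeout | cluster.connect_timeout | Connection timeout | |
| maxRequestsPerConnection | cluster.max_requests_per_connection | Maximum number of requests per connection | |

- outlierDetection (outlier detection, i.e. circuit breaking in the traditional sense: checking how many errors a service returns within a given period). Its main settings are:

  - consecutiveErrors: the number of consecutive errors. For HTTP services, 502, 503, and 504 count as errors; for TCP services, a connection timeout counts as an error

  - interval: the interval between ejection analysis sweeps; default 10s

  - baseEjectionTime: the minimum ejection duration. A host stays ejected for this duration multiplied by the number of times it has been ejected, so the ejection time grows with repeated ejections

  - maxEjectionPercent: the maximum percentage of instances in the load balancing pool that can be ejected. This stops a briefly unavailable endpoint from getting too many instances ejected, which could make the whole service unavailable

  - minHealthPercent: outlier detection stays enabled only while at least this percentage of hosts in the pool are healthy; below it, ejection is disabled. Typically maxEjectionPercent + minHealthPercent <= 100%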
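For reference, here is a sketch of a DestinationRule that sets every ConnectionPool and outlierDetection field listed above. All values are illustrative placeholders, not recommendations. It uses the same name as the httpbin-dr rule in the example that follows, so applying it would replace that rule; treat it as a field reference only.

```yaml
# Sketch: every circuit-breaking field described above in one DestinationRule.
# All numbers are illustrative placeholders (assumptions), not tuned values.
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: httpbin-dr
  namespace: test
spec:
  host: httpbin.test.svc.cluster.local
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 100            # max connections to the upstream cluster
        connectTimeout: 30ms           # TCP connection timeout
      http:
        http1MaxPendingRequests: 1024  # max queued HTTP requests
        http2MaxRequests: 1024         # max requests to the backend
        maxRequestsPerConnection: 10   # max requests per connection
        maxRetries: 3                  # max outstanding retries across the cluster
    outlierDetection:
      consecutiveErrors: 5             # errors before a host is ejected
      interval: 10s                    # how often ejection analysis runs
      baseEjectionTime: 30s            # multiplied by the number of ejections
      maxEjectionPercent: 10           # cap on the share of ejected hosts
      minHealthPercent: 50             # below this healthy share, ejection is disabled
```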

Example: using the official httpbin sample


[root@kube-mas ~]# kubectl apply -f istio-1.6.8/samples/httpbin/httpbin.yaml -n test

Create and configure the DestinationRule:


[root@kube-mas ~]# cat traffic/dr/httpbin-dr.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: httpbin-dr
  namespace: test
spec:
  host: httpbin.test.svc.cluster.local
  trafficPolicy:                     # traffic policy
    connectionPool:                  # connection pool
      tcp:                           # TCP settings
        maxConnections: 1            # at most 1 connection
      http:                          # HTTP settings
        http1MaxPendingRequests: 1   # at most 1 pending HTTP request
    outlierDetection:                # outlier detection
      consecutiveErrors: 1           # eject after 1 consecutive error
      interval: 1s                   # ejection analysis runs every 1s
      baseEjectionTime: 3m           # minimum ejection time 3m
      maxEjectionPercent: 100        # up to 100% of hosts in the pool can be ejected

[root@kube-mas ~]# kubectl apply -f traffic/dr/httpbin-dr.yaml

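The Fortio testing tool

Fortio is a fast, small (3 MB Docker image with minimal dependencies), reusable, embeddable Go library. It is also a command-line tool and a server process. The server includes a simple web UI and graphical views of results: single-run latency graphs and multi-run comparisons of min, max, average, QPS, and percentiles.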


Installation

[root@kube-mas ~]# wget https://github.com/fortio/fortio/releases/download/v1.4.4/fortio-1.4.4-1.x86_64.rpm
[root@kube-mas ~]# rpm -ivh fortio-1.4.4-1.x86_64.rpm

Usage

[root@kube-mas ~]# fortio load -c 5 -n 20 -qps 0 http://bookinfo.xieys.club:32099
Fortio 1.4.4 running at 0 queries per second, 2->2 procs, for 20 calls: http://bookinfo.xieys.club:32099
13:54:47 I httprunner.go:82> Starting http test for http://bookinfo.xieys.club:32099 with 5 threads at -1.0 qps
Starting at max qps with 5 thread(s) [gomax 2] for exactly 20 calls (4 per thread + 0)
13:54:48 I periodic.go:543> T003 ended after 581.723306ms : 4 calls. qps=6.876121274054645
13:54:48 I periodic.go:543> T001 ended after 587.833605ms : 4 calls. qps=6.8046466992985195
13:54:48 I periodic.go:543> T002 ended after 627.022631ms : 4 calls. qps=6.379355069881043
13:54:48 I periodic.go:543> T004 ended after 680.498568ms : 4 calls. qps=5.878043229034392
13:54:48 I periodic.go:543> T000 ended after 693.412563ms : 4 calls. qps=5.768571574034202
Ended after 693.480076ms : 20 calls. qps=28.84
Aggregated Function Time : count 20 avg 0.15845835 +/- 0.1487 min 0.01533095 max 0.416356761 sum 3.16916693
# range, mid point, percentile, count
>= 0.0153309 <= 0.016 , 0.0156655 , 5.00, 1
> 0.045 <= 0.05 , 0.0475 , 20.00, 3
> 0.05 <= 0.06 , 0.055 , 30.00, 2
> 0.06 <= 0.07 , 0.065 , 35.00, 1
> 0.07 <= 0.08 , 0.075 , 45.00, 2
> 0.08 <= 0.09 , 0.085 , 55.00, 2
> 0.09 <= 0.1 , 0.095 , 60.00, 1
> 0.1 <= 0.12 , 0.11 , 65.00, 1
> 0.12 <= 0.14 , 0.13 , 75.00, 2
> 0.4 <= 0.416357 , 0.408178 , 100.00, 5
# target 50% 0.085
# target 75% 0.14
# target 90% 0.409814
# target 99% 0.415702
# target 99.9% 0.416291
Sockets used: 5 (for perfect keepalive, would be 5)
Jitter: false
Code 200 : 20 (100.0 %)
Response Header Sizes : count 20 avg 174.3 +/- 0.5568 min 173 max 175 sum 3486
Response Body/Total Sizes : count 20 avg 1857.3 +/- 0.5568 min 1856 max 1858 sum 37146
All done 20 calls (plus 0 warmup) 158.458 ms avg, 28.8 qps

Parameters:

-c: number of concurrent connections

-n: total number of requests

-qps: queries per second; 0 means no limit

The tool also comes with a web UI:

[root@kube-mas ~]# fortio server
Fortio 1.4.4 grpc 'ping' server listening on [::]:8079
Fortio 1.4.4 https redirector server listening on [::]:8081
Fortio 1.4.4 echo server listening on [::]:8080
Data directory is /root
UI started - visit:
http://localhost:8080/fortio/
(or any host/ip reachable on this server)
13:56:12 I fortio_main.go:214> All fortio 1.4.4 2020-07-14 22:10 5840a17ec92d01f2969f354cabf9615339eb8286 go1.14.4 servers started!

The web console is then available at http://IP:8080/fortio

Add a client service to the mesh:


[root@kube-mas ~]# kubectl apply -f istio-1.6.8/samples/httpbin/sample-client/fortio-deploy.yaml -n test

Log in to the client pod and call the httpbin service with Fortio. The -curl flag sends a single call:


[root@kube-mas ~]# kubectl exec -it `kubectl get po -n test | grep fortio | awk '{print $1}'` -n test -c fortio -- /usr/bin/fortio load -curl http://httpbin.test.svc.cluster.local:8000/get
HTTP/1.1 200 OK
server: envoy
date: Mon, 31 May 2021 07:01:51 GMT
content-type: application/json
content-length: 662
access-control-allow-origin: *
access-control-allow-credentials: true
x-envoy-upstream-service-time: 82

{
  "args": {},
  "headers": {
    "Content-Length": "0",
    "Host": "httpbin.test.svc.cluster.local:8000",
    "User-Agent": "fortio.org/fortio-1.11.3",
    "X-B3-Parentspanid": "e6a2639be4f380bf",
    "X-B3-Sampled": "1",
    "X-B3-Spanid": "e108218c5b5aad9e",
    "X-B3-Traceid": "d0c4888affd00713e6a2639be4f380bf",
    "X-Envoy-Attempt-Count": "1",
    "X-Forwarded-Client-Cert": "By=spiffe://cluster.local/ns/test/sa/httpbin;Hash=a459c7e970fba243b84a910e0500a2428c24f9c9a18bcba3cde46f34865aadd0;Subject=\"\";URI=spiffe://cluster.local/ns/test/sa/default"
  },
  "origin": "127.0.0.1",
  "url": "http://httpbin.test.svc.cluster.local:8000/get"
}

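Tripping the circuit breaker

The DestinationRule sets maxConnections: 1 and http1MaxPendingRequests: 1. So if more than one connection and request are active concurrently, some calls should fail as istio-proxy opens the circuit for further requests and connections.

- Send requests over 2 concurrent connections (-c 2), 20 requests in total (-n 20)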


[root@kube-mas ~]# kubectl exec -it `kubectl get po -n test | grep fortio | awk '{print $1}'` -n test -c fortio -- /usr/bin/fortio load -c 2 -n 20 -qps 0 -loglevel Warning http://httpbin.test.svc.cluster.local:8000/get
07:05:16 I logger.go:127> Log level is now 3 Warning (was 2 Info)
Fortio 1.11.3 running at 0 queries per second, 2->2 procs, for 20 calls: http://httpbin.test.svc.cluster.local:8000/get
Starting at max qps with 2 thread(s) [gomax 2] for exactly 20 calls (10 per thread + 0)
Ended after 60.964372ms : 20 calls. qps=328.06
Aggregated Function Time : count 20 avg 0.0057703341 +/- 0.003037 min 0.002535254 max 0.01490974 sum 0.115406682
# range, mid point, percentile, count
>= 0.00253525 <= 0.003 , 0.00276763 , 20.00, 4
> 0.003 <= 0.004 , 0.0035 , 35.00, 3
> 0.004 <= 0.005 , 0.0045 , 50.00, 3
> 0.005 <= 0.006 , 0.0055 , 65.00, 3
> 0.006 <= 0.007 , 0.0065 , 75.00, 2
> 0.007 <= 0.008 , 0.0075 , 80.00, 1
> 0.008 <= 0.009 , 0.0085 , 85.00, 1
> 0.009 <= 0.01 , 0.0095 , 90.00, 1
> 0.01 <= 0.011 , 0.0105 , 95.00, 1
> 0.014 <= 0.0149097 , 0.0144549 , 100.00, 1
# target 50% 0.005
# target 75% 0.007
# target 90% 0.01
# target 99% 0.0147278
# target 99.9% 0.0148915
Sockets used: 2 (for perfect keepalive, would be 2)
Jitter: false
Code 200 : 20 (100.0 %)
Response Header Sizes : count 20 avg 230.05 +/- 0.2179 min 230 max 231 sum 4601
Response Body/Total Sizes : count 20 avg 892.05 +/- 0.2179 min 892 max 893 sum 17841
All done 20 calls (plus 0 warmup) 5.770 ms avg, 328.1 qps

All 20 requests completed and every one returned status 200: istio-proxy does allow some leeway.

- Raise the concurrency to 4 connections (-c 4) and send 40 requests (-n 40):

```
[root@kube-mas ~]# kubectl exec -it `kubectl get po -n test | grep fortio | awk '{print $1}'` -n test -c fortio -- /usr/bin/fortio load -c 4 -n 40 -qps 0 -loglevel Warning http://httpbin.test.svc.cluster.local:8000/get
07:07:06 I logger.go:127> Log level is now 3 Warning (was 2 Info)
Fortio 1.11.3 running at 0 queries per second, 2->2 procs, for 40 calls: http://httpbin.test.svc.cluster.local:8000/get
Starting at max qps with 4 thread(s) [gomax 2] for exactly 40 calls (10 per thread + 0)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
07:07:06 W http_client.go:693> Parsed non ok code 503 (HTTP/1.1 503)
Ended after 130.035561ms : 40 calls. qps=307.61
Aggregated Function Time : count 40 avg 0.010719075 +/- 0.01278 min 0.000416368 max 0.053915132 sum 0.428763019
# range, mid point, percentile, count
>= 0.000416368 <= 0.001 , 0.000708184 , 22.50, 9
> 0.001 <= 0.002 , 0.0015 , 27.50, 2
> 0.002 <= 0.003 , 0.0025 , 37.50, 4
> 0.003 <= 0.004 , 0.0035 , 40.00, 1
> 0.004 <= 0.005 , 0.0045 , 47.50, 3
> 0.005 <= 0.006 , 0.0055 , 55.00, 3
> 0.007 <= 0.008 , 0.0075 , 57.50, 1
> 0.008 <= 0.009 , 0.0085 , 60.00, 1
> 0.01 <= 0.011 , 0.0105 , 67.50, 3
> 0.012 <= 0.014 , 0.013 , 70.00, 1
> 0.014 <= 0.016 , 0.015 , 77.50, 3
> 0.016 <= 0.018 , 0.017 , 80.00, 1
> 0.018 <= 0.02 , 0.019 , 87.50, 3
> 0.025 <= 0.03 , 0.0275 , 90.00, 1
> 0.03 <= 0.035 , 0.0325 , 92.50, 1
> 0.035 <= 0.04 , 0.0375 , 95.00, 1
> 0.04 <= 0.045 , 0.0425 , 97.50, 1
> 0.05 <= 0.0539151 , 0.0519576 , 100.00, 1
# target 50% 0.00533333
# target 75% 0.0153333
# target 90% 0.03
# target 99% 0.0523491
# target 99.9% 0.0537585
Sockets used: 25 (for perfect keepalive, would be 4)
Jitter: false
Code 200 : 18 (45.0 %)
Code 503 : 22 (55.0 %)
Response Header Sizes : count 40 avg 103.8 +/- 114.8 min 0 max 231 sum 4152
Response Body/Total Sizes : count 40 avg 534.25 +/- 324.2 min 241 max 893 sum 21370
All done 40 calls (plus 0 warmup) 10.719 ms avg, 307.6 qps
```

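This time the expected circuit-breaking behavior shows up. Only 45% of the requests (18 of 40) succeeded. The remaining 55% (22) were intercepted by the circuit breaker and returned 503.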
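The percentages are just each status code's count divided by the 40 calls. The sketch below pulls the Code lines out of a pasted fortio summary and recomputes them. It assumes the `Code NNN : count (pct %)` line format shown above, and `status_counts` is a hypothetical helper name.

```python
import re

# Summary lines copied from the -c 4 -n 40 fortio run above.
FORTIO_SUMMARY = """\
Code 200 : 18 (45.0 %)
Code 503 : 22 (55.0 %)
"""

CODE_LINE = re.compile(r"^Code (\d+) : (\d+) \(")


def status_counts(text):
    """Return {status_code: count} from fortio 'Code ...' summary lines."""
    counts = {}
    for line in text.splitlines():
        m = CODE_LINE.match(line.strip())
        if m:
            counts[int(m.group(1))] = int(m.group(2))
    return counts


counts = status_counts(FORTIO_SUMMARY)
total = sum(counts.values())
for code, n in sorted(counts.items()):
    print(f"{code}: {n}/{total} = {100 * n / total:.1f}%")
```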

Query the istio-proxy stats for more detail on the circuit breaking:

```
[root@kube-mas ~]# kubectl exec -it `kubectl get po -n test | grep fortio | awk '{print $1}'` -n test -c istio-proxy -- pilot-agent request GET stats | grep httpbin | grep pending
cluster.outbound|8000||httpbin.test.svc.cluster.local.circuit_breakers.default.rq_pending_open: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.circuit_breakers.high.rq_pending_open: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_active: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_failure_eject: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_overflow: 94
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_total: 179
```

The following command, which reads the Envoy admin endpoint on port 15000 directly, gives the same result:

```
[root@kube-mas ~]# kubectl exec -it `kubectl get po -n test | grep fortio | awk '{print $1}'` -n test -c istio-proxy -- sh -c 'curl http://localhost:15000/stats' | grep httpbin | grep pending
cluster.outbound|8000||httpbin.test.svc.cluster.local.circuit_breakers.default.rq_pending_open: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.circuit_breakers.high.rq_pending_open: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_active: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_failure_eject: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_overflow: 94
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_total: 179
```

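upstream_rq_pending_total shows 179 requests in total, and upstream_rq_pending_overflow is 94. This means 94 calls so far have been flagged for circuit breaking. These counters are cumulative since the sidecar started, so they cover every load test run against httpbin so far, not only the last one.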
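The overflow share can be computed from the same two counters. The minimal sketch below assumes the `name: value` line format printed above and works on a pasted copy of the output rather than querying the proxy. `parse_stats` and `overflow_ratio` are hypothetical helper names.

```python
# Stats lines copied from the output above.
STATS = """\
cluster.outbound|8000||httpbin.test.svc.cluster.local.circuit_breakers.default.rq_pending_open: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.circuit_breakers.high.rq_pending_open: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_active: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_failure_eject: 0
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_overflow: 94
cluster.outbound|8000||httpbin.test.svc.cluster.local.upstream_rq_pending_total: 179
"""

CLUSTER = "cluster.outbound|8000||httpbin.test.svc.cluster.local."


def parse_stats(text):
    """Map each 'name: value' line to {full_stat_name: int(value)}."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.rpartition(": ")
        if sep and value.strip().isdigit():
            stats[name] = int(value)
    return stats


def overflow_ratio(stats, cluster=CLUSTER):
    """Share of pending requests that overflowed (were circuit-broken)."""
    overflow = stats[cluster + "upstream_rq_pending_overflow"]
    total = stats[cluster + "upstream_rq_pending_total"]
    return overflow / total


stats = parse_stats(STATS)
print(f"{overflow_ratio(stats):.1%} of pending requests overflowed")
```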


Mirroring

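Traffic mirroring, also called shadowing, is a powerful feature for bringing changes to production with as little risk as possible. Mirroring sends a copy of live traffic to a mirrored service. The mirrored requests happen outside the critical request path of the primary service.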

In the example below, all httpbin traffic is first routed to v1. A rule is then applied to mirror a portion of that traffic to v2.

Environment setup


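Before starting, clean up everything deployed in the previous experiment.

Deploy v1:

```
[root@kube-mas ~]# kubectl apply -f istio-1.6.8/samples/httpbin/httpbin.yaml -n test
```

Deploy v2. Copy the manifest and switch every version label to v2 with sed. Then rename the Deployment to httpbin-v2 in an editor and apply the file:

```
[root@kube-mas ~]# cp istio-1.6.8/samples/httpbin/httpbin{,-v2}.yaml
[root@kube-mas ~]# sed -ri 's/(.*version: ).*/\1v2/' istio-1.6.8/samples/httpbin/httpbin-v2.yaml
[root@kube-mas ~]# vim istio-1.6.8/samples/httpbin/httpbin-v2.yaml
# in the editor, change the Deployment's name to httpbin-v2
[root@kube-mas ~]# kubectl apply -f istio-1.6.8/samples/httpbin/httpbin-v2.yaml -n test
```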
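The manual vim step can also be scripted. The sketch below makes two assumptions:

- The manifest's documents are separated by `---` lines.
- The Deployment's `metadata.name` is indented by exactly two spaces, as in the httpbin sample.

`rename_deployment` is a hypothetical helper. It only renames the Deployment and leaves the Service and ServiceAccount named httpbin.

```python
import re


def rename_deployment(manifest: str, old: str, new: str) -> str:
    """Rename metadata.name only in the YAML documents whose kind is Deployment.

    Assumes documents are separated by a line containing just '---' and that
    metadata.name is indented by exactly two spaces (as in the httpbin sample).
    """
    docs = manifest.split("\n---\n")
    for i, doc in enumerate(docs):
        if re.search(r"^kind:[ \t]*Deployment[ \t]*$", doc, flags=re.M):
            # Only the first two-space-indented 'name: <old>' line is changed, so
            # deeper-indented names (e.g. the container name) stay untouched.
            docs[i] = re.sub(
                rf"^(  name:[ \t]*){re.escape(old)}[ \t]*$",
                lambda m: m.group(1) + new,
                doc,
                count=1,
                flags=re.M,
            )
    return "\n---\n".join(docs)

# Usage on the copied manifest (not executed here):
#   from pathlib import Path
#   p = Path("istio-1.6.8/samples/httpbin/httpbin-v2.yaml")
#   p.write_text(rename_deployment(p.read_text(), "httpbin", "httpbin-v2"))
```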

Create a VirtualService that routes all traffic to v1:

```
[root@kube-mas ~]# cat traffic/vs/httpbin-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin-vs
  namespace: test
spec:
  hosts:
  - httpbin.test.svc.cluster.local
  http:
  - route:
    - destination:
        host: httpbin.test.svc.cluster.local
        subset: v1
      weight: 100
[root@kube-mas ~]# kubectl apply -f traffic/vs/httpbin-vs.yaml
```

Create the matching DestinationRule, which defines the v1 and v2 subsets:

```
[root@kube-mas ~]# cat traffic/dr/httpbin-dr.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: httpbin-dr
  namespace: test
spec:
  host: httpbin.test.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
[root@kube-mas ~]# kubectl apply -f traffic/dr/httpbin-dr.yaml
```
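Each subset narrows the service's endpoints to the pods whose labels include all of the subset's labels. The toy sketch below shows that matching. The pod names are hypothetical, and it assumes the httpbin Service selects `app: httpbin`.

```python
# Hypothetical pod names; labels follow the httpbin manifests deployed above.
PODS = {
    "httpbin-v1-pod": {"app": "httpbin", "version": "v1"},
    "httpbin-v2-pod": {"app": "httpbin", "version": "v2"},
    "sleep-pod": {"app": "sleep"},
}
SERVICE_SELECTOR = {"app": "httpbin"}  # assumed selector of the httpbin Service
SUBSETS = {"v1": {"version": "v1"}, "v2": {"version": "v2"}}  # from httpbin-dr


def matches(labels, selector):
    """True if the pod carries every key/value pair in the selector."""
    return all(labels.get(k) == v for k, v in selector.items())


def subset_members(pods, service_selector, subset_labels):
    """Pods that belong to the Service and also match the subset's labels."""
    return sorted(
        name
        for name, labels in pods.items()
        if matches(labels, service_selector) and matches(labels, subset_labels)
    )


for subset, labels in SUBSETS.items():
    print(subset, subset_members(PODS, SERVICE_SELECTOR, labels))
```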

Deploy the official sleep sample as a client and send a test request:

```
[root@kube-mas ~]# kubectl apply -f istio-1.6.8/samples/sleep/sleep.yaml -n test
[root@kube-mas ~]# kubectl exec -it -n test `kubectl get po -n test -l app=sleep -o jsonpath={.items..metadata.name}` -c sleep -- sh -c 'curl http://httpbin:8000/headers'
{
  "headers": {
    "Accept": "*/*",
    "Content-Length": "0",
    "Host": "httpbin:8000",
    "User-Agent": "curl/7.76.1",
    "X-B3-Parentspanid": "92d3e30e986559e3",
    "X-B3-Sampled": "1",
    "X-B3-Spanid": "6a072a12c6b17524",
    "X-B3-Traceid": "6f2b44bb428fd1a092d3e30e986559e3",
    "X-Envoy-Attempt-Count": "1",
    "X-Forwarded-Client-Cert": "By=spiffe://cluster.local/ns/test/sa/httpbin;Hash=0d61ebfa0288cd15e68c277ae6d772c52d0fe3e6aecee44f3165ae53a54c2fed;Subject=\"\";URI=spiffe://cluster.local/ns/test/sa/sleep"
  }
}
```

Check the istio-proxy logs of the httpbin v1 and v2 pods. The request appears only in the v1 log; v2 has received nothing.

v1:

```
[root@kube-mas ~]# kubectl logs -n test `kubectl get po -n test -l app=httpbin,version=v1 -o jsonpath={.items..metadata.name}` -c istio-proxy --tail=5
2021-05-31T07:33:31.114086Z info sds resource:ROOTCA pushed root cert to proxy
2021-05-31T07:33:31.420193Z warning envoy filter [src/envoy/http/authn/http_filter_factory.cc:83] mTLS PERMISSIVE mode is used, connection can be either plaintext or TLS, and client cert can be omitted. Please consider to upgrade to mTLS STRICT mode for more secure configuration that only allows TLS connection with client cert. See https://istio.io/docs/tasks/security/mtls-migration/
2021-05-31T07:33:31.421609Z warning envoy filter [src/envoy/http/authn/http_filter_factory.cc:83] mTLS PERMISSIVE mode is used, connection can be either plaintext or TLS, and client cert can be omitted. Please consider to upgrade to mTLS STRICT mode for more secure configuration that only allows TLS connection with client cert. See https://istio.io/docs/tasks/security/mtls-migration/
2021-05-31T07:33:33.545997Z info Envoy proxy is ready
[2021-05-31T07:36:04.969Z] "GET /headers HTTP/1.1" 200 - "-" "-" 0 545 100 95 "-" "curl/7.76.1" "8fac3a5b-24bc-9137-bcba-430a9117fc9c" "httpbin:8000" "127.0.0.1:80" inbound|8000|http|httpbin.test.svc.cluster.local 127.0.0.1:51470 10.244.1.169:80 10.244.1.168:39992 outbound_.8000_.v1_.httpbin.test.svc.cluster.local default
```

v2:

```
[root@kube-mas ~]# kubectl logs -n test `kubectl get po -n test -l app=httpbin,version=v2 -o jsonpath={.items..metadata.name}` -c istio-proxy --tail=5
2021-05-31T07:24:42.294674Z info cache Loaded root cert from certificate ROOTCA
2021-05-31T07:24:42.297105Z info sds resource:ROOTCA pushed root cert to proxy
2021-05-31T07:24:42.448945Z warning envoy filter [src/envoy/http/authn/http_filter_factory.cc:83] mTLS PERMISSIVE mode is used, connection can be either plaintext or TLS, and client cert can be omitted. Please consider to upgrade to mTLS STRICT mode for more secure configuration that only allows TLS connection with client cert. See https://istio.io/docs/tasks/security/mtls-migration/
2021-05-31T07:24:42.450383Z warning envoy filter [src/envoy/http/authn/http_filter_factory.cc:83] mTLS PERMISSIVE mode is used, connection can be either plaintext or TLS, and client cert can be omitted. Please consider to upgrade to mTLS STRICT mode for more secure configuration that only allows TLS connection with client cert. See https://istio.io/docs/tasks/security/mtls-migration/
2021-05-31T07:24:43.426725Z info Envoy proxy is ready
```

Configure mirroring of traffic to v2:

```
[root@kube-mas ~]# cat traffic/vs/httpbin-vs.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin-vs
  namespace: test
spec:
  hosts:
  - httpbin.test.svc.cluster.local
  http:
  - route:
    - destination:
        host: httpbin.test.svc.cluster.local
        subset: v1
      weight: 100
    mirror:                # mirroring target
      host: httpbin.test.svc.cluster.local
      subset: v2           # which version to mirror to
    mirror_percent: 100    # percentage of traffic to mirror
[root@kube-mas ~]# kubectl apply -f traffic/vs/httpbin-vs.yaml
```


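This route rule sends 100% of the traffic to v1, and the final mirror block mirrors that traffic to v2. Mirrored requests are sent to the mirrored service with -shadow appended to their Host/Authority header. For example, cluster-1 becomes cluster-1-shadow.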
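When the Host value carries a port, the suffix goes before the port. The v2 access log at the end of this section shows this: httpbin:8000 becomes httpbin-shadow:8000. The sketch below reproduces that rewrite from the observed behavior, not from Envoy's source. `shadow_host` is a hypothetical name, and IPv6 literals (which contain colons) are not handled.

```python
def shadow_host(host: str) -> str:
    """Append '-shadow' to the host part of a Host/:authority value, keeping any port.

    Assumes at most one ':' (host:port); IPv6 literals are not handled.
    """
    name, sep, port = host.partition(":")
    return f"{name}-shadow{sep}{port}"


print(shadow_host("cluster-1"))
print(shadow_host("httpbin:8000"))
```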

Note also that mirrored traffic is "fire and forget": the responses to mirrored requests are discarded.
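"Fire and forget" means the proxy answers the client without waiting for the mirrored request. The toy asyncio sketch below illustrates the idea; it is not how Envoy is implemented. `call_primary` and `call_shadow` are hypothetical stand-ins for v1 and v2, and the shadow is deliberately slower.

```python
import asyncio


# Hypothetical stand-ins for the two httpbin versions.
async def call_primary(request: str) -> str:
    await asyncio.sleep(0.01)
    return f"v1 handled {request}"


async def call_shadow(request: str) -> str:
    await asyncio.sleep(0.2)        # deliberately slower than the primary
    return f"v2 handled {request}"  # this value is never returned to the client


async def handle(request: str, background: set) -> str:
    shadow = asyncio.create_task(call_shadow(request))  # fire the mirrored copy...
    background.add(shadow)                              # keep a reference while it runs
    shadow.add_done_callback(background.discard)
    return await call_primary(request)                  # ...answer from the primary only


async def main():
    background: set = set()
    response = await handle("GET /headers", background)
    still_running = len(background)    # shadow still in flight when the client is answered
    await asyncio.gather(*background)  # demo only: let the shadow finish before exiting
    return response, still_running


print(asyncio.run(main()))
```

The client gets the v1 response while the shadow call is still running, and the shadow's return value is never used.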

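Instead of mirroring every request, you can use the mirror_percent field to mirror only a percentage of the traffic. If the field is absent, all traffic is mirrored.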
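Conceptually, mirroring a percentage means deciding per request whether to send a copy. The toy sampler below assumes independent uniform random sampling for each request. `should_mirror` is hypothetical, and Envoy's actual sampling mechanism is not reproduced here.

```python
import random


def should_mirror(percent: float, rng: random.Random) -> bool:
    """Toy sampler: mirror a request with probability percent/100."""
    return rng.random() * 100 < percent  # rng.random() is in [0, 1)


rng = random.Random(42)  # seeded so the demo is repeatable
mirrored = sum(should_mirror(10, rng) for _ in range(10_000))
print(f"{mirrored} of 10000 requests mirrored at 10%")
```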

Send a request to check whether the traffic is mirrored:

```
[root@kube-mas ~]# kubectl exec -it -n test `kubectl get po -n test -l app=sleep -o jsonpath={.items..metadata.name}` -c sleep -- sh -c 'curl http://httpbin:8000/headers'
```

Check the logs of the v1 and v2 pods again: this time both contain an entry for the request. The two entries carry the same request ID (53294664-a843-90c6-97a1-0aa21ce80bdc). In the v2 entry the authority is httpbin-shadow:8000, which marks it as the mirrored copy.

v1:

```
[root@kube-mas ~]# kubectl logs -n test `kubectl get po -n test -l app=httpbin,version=v1 -o jsonpath={.items..metadata.name}` -c istio-proxy --tail=5
2021-05-31T07:33:31.420193Z warning envoy filter [src/envoy/http/authn/http_filter_factory.cc:83] mTLS PERMISSIVE mode is used, connection can be either plaintext or TLS, and client cert can be omitted. Please consider to upgrade to mTLS STRICT mode for more secure configuration that only allows TLS connection with client cert. See https://istio.io/docs/tasks/security/mtls-migration/
2021-05-31T07:33:31.421609Z warning envoy filter [src/envoy/http/authn/http_filter_factory.cc:83] mTLS PERMISSIVE mode is used, connection can be either plaintext or TLS, and client cert can be omitted. Please consider to upgrade to mTLS STRICT mode for more secure configuration that only allows TLS connection with client cert. See https://istio.io/docs/tasks/security/mtls-migration/
2021-05-31T07:33:33.545997Z info Envoy proxy is ready
[2021-05-31T07:36:04.969Z] "GET /headers HTTP/1.1" 200 - "-" "-" 0 545 100 95 "-" "curl/7.76.1" "8fac3a5b-24bc-9137-bcba-430a9117fc9c" "httpbin:8000" "127.0.0.1:80" inbound|8000|http|httpbin.test.svc.cluster.local 127.0.0.1:51470 10.244.1.169:80 10.244.1.168:39992 outbound_.8000_.v1_.httpbin.test.svc.cluster.local default
[2021-05-31T07:45:55.946Z] "GET /headers HTTP/1.1" 200 - "-" "-" 0 545 21 21 "-" "curl/7.76.1" "53294664-a843-90c6-97a1-0aa21ce80bdc" "httpbin:8000" "127.0.0.1:80" inbound|8000|http|httpbin.test.svc.cluster.local 127.0.0.1:39836 10.244.1.169:80 10.244.1.168:56586 outbound_.8000_.v1_.httpbin.test.svc.cluster.local default
```

v2:

```
[root@kube-mas ~]# kubectl logs -n test `kubectl get po -n test -l app=httpbin,version=v2 -o jsonpath={.items..metadata.name}` -c istio-proxy --tail=5
2021-05-31T07:24:42.297105Z info sds resource:ROOTCA pushed root cert to proxy
2021-05-31T07:24:42.448945Z warning envoy filter [src/envoy/http/authn/http_filter_factory.cc:83] mTLS PERMISSIVE mode is used, connection can be either plaintext or TLS, and client cert can be omitted. Please consider to upgrade to mTLS STRICT mode for more secure configuration that only allows TLS connection with client cert. See https://istio.io/docs/tasks/security/mtls-migration/
2021-05-31T07:24:42.450383Z warning envoy filter [src/envoy/http/authn/http_filter_factory.cc:83] mTLS PERMISSIVE mode is used, connection can be either plaintext or TLS, and client cert can be omitted. Please consider to upgrade to mTLS STRICT mode for more secure configuration that only allows TLS connection with client cert. See https://istio.io/docs/tasks/security/mtls-migration/
2021-05-31T07:24:43.426725Z info Envoy proxy is ready
[2021-05-31T07:45:55.958Z] "GET /headers HTTP/1.1" 200 - "-" "-" 0 585 10 10 "10.244.1.168" "curl/7.76.1" "53294664-a843-90c6-97a1-0aa21ce80bdc" "httpbin-shadow:8000" "127.0.0.1:80" inbound|8000|http|httpbin.test.svc.cluster.local 127.0.0.1:39838 10.244.1.167:80 10.244.1.168:0 outbound_.8000_.v2_.httpbin.test.svc.cluster.local default
```
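To confirm that the v2 entry mirrors the v1 request rather than being a separate call, match the two log lines on their request ID. The sketch below assumes, as holds for these two lines, that the request ID and the authority are the third-to-last and second-to-last quoted fields of each access-log entry. `request_id_and_authority` is a hypothetical helper.

```python
import re

# The two access-log entries for the mirrored request, copied from the logs above.
V1_LOG = ('[2021-05-31T07:45:55.946Z] "GET /headers HTTP/1.1" 200 - "-" "-" 0 545 21 21 "-" '
          '"curl/7.76.1" "53294664-a843-90c6-97a1-0aa21ce80bdc" "httpbin:8000" "127.0.0.1:80" '
          'inbound|8000|http|httpbin.test.svc.cluster.local 127.0.0.1:39836 10.244.1.169:80 '
          '10.244.1.168:56586 outbound_.8000_.v1_.httpbin.test.svc.cluster.local default')
V2_LOG = ('[2021-05-31T07:45:55.958Z] "GET /headers HTTP/1.1" 200 - "-" "-" 0 585 10 10 "10.244.1.168" '
          '"curl/7.76.1" "53294664-a843-90c6-97a1-0aa21ce80bdc" "httpbin-shadow:8000" "127.0.0.1:80" '
          'inbound|8000|http|httpbin.test.svc.cluster.local 127.0.0.1:39838 10.244.1.167:80 '
          '10.244.1.168:0 outbound_.8000_.v2_.httpbin.test.svc.cluster.local default')


def request_id_and_authority(line: str):
    """Return (request_id, authority), assumed to be the 3rd- and 2nd-to-last quoted fields."""
    quoted = re.findall(r'"([^"]*)"', line)
    return quoted[-3], quoted[-2]


v1_id, v1_authority = request_id_and_authority(V1_LOG)
v2_id, v2_authority = request_id_and_authority(V2_LOG)
print(v1_id == v2_id, v1_authority, v2_authority)
```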